Unlocking the Power of Amazon ElastiCache: Boosting Speed and Efficiency for Your Web Applications

In today’s fast-paced digital world, speed is everything. Slow-loading pages and lagging applications can not only frustrate your customers but also lead to lost revenue. This is where Amazon ElastiCache comes in. With its powerful caching solution, ElastiCache helps boost the speed and efficiency of your web applications, providing a seamless user experience.

By utilizing ElastiCache, you can significantly reduce the load on your database, as frequently accessed data is stored in-memory, resulting in quicker response times. This not only improves the overall performance of your applications but also enhances scalability and reduces latency.

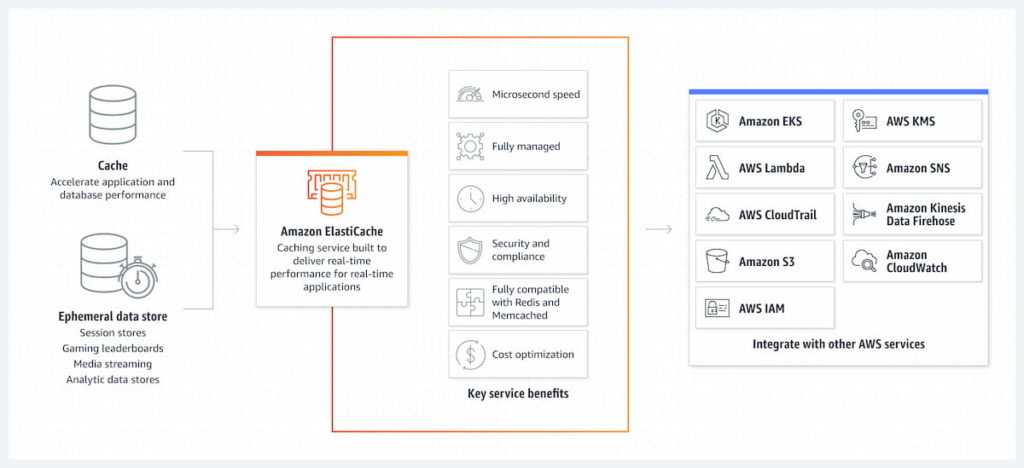

Moreover, ElastiCache is designed to integrate with other AWS services, such as Amazon EC2 and Amazon RDS, making it easier to deploy, scale, and manage your applications. With features such as automatic backups and automatic failover (both available with the Redis engine), ElastiCache helps keep your cache highly available and recoverable.

In this guide, we will explore the ins and outs of Amazon ElastiCache, discussing its key benefits, use cases, and best practices for implementation. Unleash the power of ElastiCache and revolutionize the speed and efficiency of your web applications today.

What is Amazon ElastiCache?

Amazon ElastiCache is a fully managed, in-memory caching service provided by Amazon Web Services (AWS). It allows you to improve the performance of your web applications by caching frequently accessed data in-memory, reducing the need to fetch data from the database every time a request is made.

ElastiCache supports two popular open-source caching engines: Redis and Memcached. Redis is known for its advanced data structures and versatile features, while Memcached is known for its simplicity and high performance. Recently, ElastiCache has started supporting network-optimized C7gn Graviton3-based instances, leveraging the AWS Nitro System for enhanced security and performance.

With ElastiCache, you can create a cache cluster that can scale horizontally, allowing you to handle increasing traffic without compromising performance. This is achieved by adding more cache nodes to the cluster, which distribute the data across multiple nodes, improving both read and write performance.
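As an illustration of how keys can be spread across nodes, the sketch below follows the Redis Cluster hash-slot scheme (CRC16 of the key, modulo 16384), which applies to Redis with cluster mode enabled. The `shard_for_slot` helper and its even split of slots across shards are simplifying assumptions made for this example, not a description of how ElastiCache assigns slots.

```python
from binascii import crc_hqx

HASH_SLOTS = 16384  # fixed number of hash slots in Redis Cluster


def key_slot(key: str) -> int:
    """Return the Redis Cluster hash slot for a key."""
    data = key.encode("utf-8")
    # If the key contains a non-empty {hash tag}, only the tag is hashed,
    # so related keys can be placed in the same slot.
    start = data.find(b"{")
    if start != -1:
        end = data.find(b"}", start + 1)
        if end != -1 and end != start + 1:
            data = data[start + 1:end]
    # crc_hqx with an initial value of 0 is the CRC16 (XMODEM) variant.
    return crc_hqx(data, 0) % HASH_SLOTS


def shard_for_slot(slot: int, shard_count: int) -> int:
    """Hypothetical helper: assume slots are split evenly across shards."""
    return slot * shard_count // HASH_SLOTS


if __name__ == "__main__":
    for key in ("user:1001", "{user:1001}:profile", "{user:1001}:cart"):
        slot = key_slot(key)
        print(key, slot, shard_for_slot(slot, shard_count=3))
```

Keys that share a hash tag, such as `{user:1001}:profile` and `{user:1001}:cart`, land in the same slot, which is what allows multi-key operations on related data in a sharded cluster.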

By utilizing ElastiCache, you can offload the workload from your database, resulting in reduced latency and improved scalability. This is particularly beneficial for applications that experience heavy read traffic, as the frequently accessed data is readily available in the cache, eliminating the need to query the database for every request.

Benefits of Using Amazon ElastiCache

Amazon ElastiCache offers numerous benefits for your web applications. Let’s explore some of the key advantages:

Improved Performance

One of the primary benefits of using ElastiCache is the significant improvement in application performance. By caching frequently accessed data in-memory, ElastiCache reduces the response time of your applications, providing a faster and more efficient user experience. This is especially crucial for applications that deal with high traffic and require real-time data retrieval.

Scalability and Elasticity

ElastiCache allows you to scale your cache cluster horizontally by adding or removing cache nodes based on your application’s demand. This ensures that your application can handle increasing traffic without compromising performance. Additionally, ElastiCache provides Auto Discovery for Memcached clusters, so clients can find nodes as they are added or removed, and automatic failover for Redis replication groups, making it easier to scale and maintain high availability.

Reduced Database Load

By caching frequently accessed data in-memory, ElastiCache significantly reduces the load on your database. This means that your database can focus on handling more complex queries and transactions, resulting in improved overall performance. Offloading the workload from the database also reduces the risk of bottlenecks and improves the scalability of your application.

Cost Optimization

With ElastiCache, you can optimize costs by reducing the amount of data read from your database. By caching frequently accessed data, you can minimize the need to query the database, which can result in reduced database costs. Additionally, ElastiCache offers a pay-as-you-go pricing model, allowing you to pay only for the resources you consume.

Seamless Integration with AWS Services

ElastiCache seamlessly integrates with other AWS services, such as Amazon EC2 and Amazon RDS. This integration makes it easier to deploy, scale, and manage your applications. For example, you can easily configure your EC2 instances to use the ElastiCache cluster as their caching layer, enhancing the performance of your applications.

Understanding Caching in Web Applications

To fully grasp the benefits of Amazon ElastiCache, it’s essential to understand how caching works in web applications. Caching is the process of storing frequently accessed data in a cache, which is a temporary storage area that is closer to the application than the database. When a request is made for a particular piece of data, the application first checks the cache. If the data is found in the cache, it is retrieved quickly, avoiding the need to query the database.

Caching is particularly effective for web applications that have repetitive requests for the same data. By storing the data in-memory, caching eliminates the need to fetch it from the database every time, resulting in faster response times and reduced database load. This can be especially beneficial for read-heavy applications, as the frequently accessed data is readily available in the cache, improving overall performance.

There are two main types of caching: client-side caching and server-side caching. Client-side caching involves storing data in the user’s browser, while server-side caching involves storing data on the server. Amazon ElastiCache falls under the category of server-side caching, as it stores the data in-memory on the cache nodes.

How Amazon ElastiCache Works

Amazon ElastiCache is an in-memory key-value store that acts as an intermediary between your application and the data store (database). When your application requests data, it first queries the ElastiCache cache. If the data exists in the cache and is current, ElastiCache returns the data directly to your application. This scenario is known as a cache hit.

However, if the data does not exist in the cache or has expired (a scenario known as a cache miss), the process is as follows:

- Your application requests data from the ElastiCache cache.

- Since the cache does not have the requested data, it returns a null response.

- Your application then requests the data from your data store (database).

- The data store returns the data to your application.

- Your application writes the data received from the store into the ElastiCache cache, so it is available for quicker retrieval the next time it is requested.

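This lazy loading approach ensures that data is loaded into the cache only when it is actually requested, which optimizes resource usage. Because the cache is updated only on a miss, a cached entry can become stale if the underlying record changes; setting an expiration time (TTL) on each entry, or updating the cache whenever the database is written, keeps the data current.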
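The cache-miss steps above can be sketched in a few lines of Python. This is a minimal sketch, not a production client: `InMemoryCache` is a stand-in written for this example so the code runs without a real cluster, and `get_with_lazy_loading` and `load_from_database` are hypothetical names. In a real application the cache object would be a Redis or Memcached client pointed at your ElastiCache endpoint.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class InMemoryCache:
    """Stand-in for a cache client; stores each value with an expiry time."""

    def __init__(self) -> None:
        self._items: Dict[str, Tuple[str, float]] = {}

    def get(self, key: str) -> Optional[str]:
        entry = self._items.get(key)
        if entry is None:
            return None  # cache miss: the key was never stored
        value, expires_at = entry
        if time.monotonic() >= expires_at:
            del self._items[key]
            return None  # cache miss: the entry has expired
        return value

    def set(self, key: str, value: str, ttl_seconds: float) -> None:
        self._items[key] = (value, time.monotonic() + ttl_seconds)


def get_with_lazy_loading(
    cache: InMemoryCache,
    key: str,
    load_from_database: Callable[[str], str],
    ttl_seconds: float = 300.0,
) -> str:
    """Check the cache first; on a miss, read the database and fill the cache."""
    value = cache.get(key)
    if value is not None:
        return value  # cache hit
    value = load_from_database(key)  # cache miss: go to the data store
    cache.set(key, value, ttl_seconds)  # store it for the next request
    return value
```

The TTL passed to `set` is what bounds how long a stale value can be served; the 300-second default here is an arbitrary choice for illustration.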

The two supported engines differ in what they offer beyond this basic flow. Redis is an in-memory data structure store with advanced features such as data persistence, pub/sub messaging, and geospatial indexing. Memcached, on the other hand, is a high-performance, distributed memory object caching system that is known for its simplicity and speed.

Setting up Amazon ElastiCache for Your Web Application

Setting up Amazon ElastiCache for your web application is a straightforward process. Here are the steps to get started:

Step 1: Choose a Caching Engine

The first step is to choose the caching engine that best suits your application’s needs. Redis is the better fit when you need advanced data structures, persistence, or replication with failover, while Memcached suits simple key-value caching where simplicity and high performance matter most. Consider the specific requirements of your application and select the caching engine accordingly.

Step 2: Create a Cache Cluster

Once you have chosen the caching engine, you can create a cache cluster. A cache cluster consists of one or more cache nodes, which are individual caching instances. Each cache node runs an instance of the caching engine and stores a portion of the data. Decide on the number and size of cache nodes based on your application’s needs and expected traffic.
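As a rough sketch of this step, the example below builds the parameters for the ElastiCache `CreateCacheCluster` API and passes them to the AWS SDK for Python (boto3). The helper names `build_cache_cluster_request` and `create_cache_cluster`, and the cluster ID and node type in the usage note, are assumptions chosen for illustration. It uses the Memcached engine because a Memcached cluster can contain several nodes.

```python
from typing import Any, Dict


def build_cache_cluster_request(
    cluster_id: str, node_type: str, num_nodes: int
) -> Dict[str, Any]:
    """Build keyword arguments for the CreateCacheCluster API call."""
    if num_nodes < 1:
        raise ValueError("a cache cluster needs at least one node")
    return {
        "CacheClusterId": cluster_id,
        "Engine": "memcached",
        "CacheNodeType": node_type,
        "NumCacheNodes": num_nodes,
    }


def create_cache_cluster(request: Dict[str, Any]) -> Dict[str, Any]:
    """Send the request to AWS; needs boto3 and valid AWS credentials."""
    import boto3  # third-party SDK, imported lazily so the builder stays testable

    client = boto3.client("elasticache")
    return client.create_cache_cluster(**request)
```

For example, `build_cache_cluster_request("demo-cache", "cache.t3.micro", 2)` describes a two-node cluster; calling `create_cache_cluster` with that dictionary would create billable resources, so review the values first.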

Step 3: Configure Security and Access Control

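It is crucial to configure security and access control for your ElastiCache cluster. AWS Identity and Access Management (IAM) policies control who can create, modify, or delete the cluster through the ElastiCache API, while network access from your application to the cache nodes is governed by the cluster’s VPC and security groups. Additionally, you can enable encryption at rest (available with the Redis engine) and encryption in transit to secure your data.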

Step 4: Configure Your Application to Use ElastiCache

To leverage the power of ElastiCache, you need to update your application’s code to use the cache cluster as its caching layer. This involves modifying the code to first check the cache for the requested data. If the data is found in the cache, it is retrieved and returned to the application. If the data is not found in the cache, the application fetches it from the database, stores it in the cache, and then returns it to the user.

Step 5: Test and Monitor Your ElastiCache Cluster

After configuring your application to use ElastiCache, it is essential to thoroughly test and monitor your cache cluster. Monitor the cluster’s performance, including metrics such as cache hits, cache misses, and CPU and memory utilization. This will help you identify any performance issues or bottlenecks and optimize your configuration accordingly.
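A useful number to derive from the hit and miss counts is the cache hit ratio, hits / (hits + misses). The small function below is a sketch of that arithmetic; the name `cache_hit_ratio` is an assumption made for this example, and the counts would come from your monitoring data.

```python
def cache_hit_ratio(hits: int, misses: int) -> float:
    """Return the fraction of lookups served from the cache (0.0 to 1.0)."""
    total = hits + misses
    if total == 0:
        return 0.0  # no lookups yet, so there is no meaningful ratio
    return hits / total
```

For instance, 9,000 hits and 1,000 misses give a ratio of 0.9, meaning 90% of lookups never reached the database. A ratio that stays low suggests the cached keys or their expiration times need another look.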

Best Practices for Optimizing Performance with Amazon ElastiCache

To ensure optimal performance with Amazon ElastiCache, consider the following best practices:

Choose the Right Caching Engine and Instance Type

Select the caching engine and instance type based on your application’s specific requirements. Redis offers advanced features and data structures, while Memcached provides simplicity and high performance. Similarly, choose the instance type that aligns with your application’s needs in terms of memory, CPU, and network performance.

Design an Effective Cache Key Strategy

A cache key is used to uniquely identify a piece of data stored in the cache. Designing an effective cache key strategy is crucial for maximizing cache hits and minimizing cache misses. Consider including relevant information in the cache key, such as the unique identifier of the data and any parameters or filters applied to the data.
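One possible way to build such keys is sketched below. The function name `build_cache_key`, the `version:entity:id?params` layout, and the `v1` prefix are all assumptions chosen for this example; the point is that the same logical request always produces the same key, regardless of the order in which filters are supplied.

```python
from typing import Mapping, Optional
from urllib.parse import urlencode


def build_cache_key(
    entity: str,
    identifier: object,
    params: Optional[Mapping[str, object]] = None,
    version: str = "v1",
) -> str:
    """Build a deterministic cache key from an entity, its ID, and any filters."""
    key = f"{version}:{entity}:{identifier}"
    if params:
        # Sort the parameters so that argument order never changes the key.
        query = urlencode(sorted((str(k), str(v)) for k, v in params.items()))
        key = f"{key}?{query}"
    return key


if __name__ == "__main__":
    print(build_cache_key("product", 42, {"lang": "en", "currency": "EUR"}))
```

Including a version prefix is a design choice: changing it from `v1` to `v2` after a change to the cached data format makes all old entries unreachable without having to delete them.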

Implement Cache Eviction Policies

Expiration and eviction policies determine how long data remains in the cache. A time to live (TTL) expires an entry a fixed time after it is written, which bounds how stale it can become, while an eviction policy such as LRU (Least Recently Used) decides which entries to discard when the cache runs out of memory. Combining the two helps manage the cache size and ensures that only relevant and frequently accessed data is stored in the cache.
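To make the two ideas concrete, here is a small in-process cache that applies both a TTL and LRU eviction. It is an illustrative sketch only: the class name `LruTtlCache` is hypothetical, and a real ElastiCache node applies these policies inside the engine (for Redis, eviction behavior is selected with the `maxmemory-policy` parameter) rather than in your application code.

```python
import time
from collections import OrderedDict
from typing import Any, Optional, Tuple


class LruTtlCache:
    """Tiny cache with a per-entry TTL and least-recently-used eviction."""

    def __init__(self, max_items: int, ttl_seconds: float) -> None:
        self._max_items = max_items
        self._ttl = ttl_seconds
        self._items: "OrderedDict[str, Tuple[Any, float]]" = OrderedDict()

    def get(self, key: str) -> Optional[Any]:
        entry = self._items.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if time.monotonic() >= expires_at:
            del self._items[key]  # TTL reached: drop the expired entry
            return None
        self._items.move_to_end(key)  # mark as most recently used
        return value

    def set(self, key: str, value: Any) -> None:
        self._items[key] = (value, time.monotonic() + self._ttl)
        self._items.move_to_end(key)
        while len(self._items) > self._max_items:
            self._items.popitem(last=False)  # evict the least recently used entry
```

With `max_items=2`, storing `a` and `b`, reading `a`, and then storing `c` evicts `b`, because `b` is the entry that was used least recently.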

Use Multi-AZ Deployment for High Availability

To ensure high availability and fault tolerance, consider deploying your ElastiCache cluster across multiple Availability Zones (AZs). With the Redis engine, a Multi-AZ deployment places a primary node and its read replicas in different AZs and automatically promotes a replica if the primary fails, so your cache remains accessible even in the event of a node or AZ failure.
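For the Redis engine, a Multi-AZ setup is requested through the `CreateReplicationGroup` API. The sketch below builds those parameters for the AWS SDK for Python (boto3); the helper names, the description text, and the example values are assumptions made for illustration, and the request would need to be adapted to your VPC, subnet group, and security settings.

```python
from typing import Any, Dict


def build_multi_az_request(
    group_id: str, node_type: str, replica_count: int
) -> Dict[str, Any]:
    """Build keyword arguments for a Multi-AZ Redis replication group."""
    if replica_count < 1:
        raise ValueError("Multi-AZ failover needs at least one replica")
    return {
        "ReplicationGroupId": group_id,
        "ReplicationGroupDescription": "Multi-AZ cache for the web tier",
        "Engine": "redis",
        "CacheNodeType": node_type,
        "NumCacheClusters": replica_count + 1,  # one primary plus the replicas
        "AutomaticFailoverEnabled": True,
        "MultiAZEnabled": True,
    }


def create_replication_group(request: Dict[str, Any]) -> Dict[str, Any]:
    """Send the request to AWS; needs boto3 and valid AWS credentials."""
    import boto3  # third-party SDK, imported lazily so the builder stays testable

    return boto3.client("elasticache").create_replication_group(**request)
```

Each replica adds cost, so the replica count is a trade-off between availability, read capacity, and budget.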

Monitoring and Troubleshooting Amazon ElastiCache

Monitoring and troubleshooting your Amazon ElastiCache cluster is crucial for maintaining optimal performance and identifying any issues. Amazon ElastiCache provides various tools and features to monitor and troubleshoot your cache cluster.

CloudWatch Metrics

Amazon ElastiCache integrates with Amazon CloudWatch, which provides a range of metrics to monitor the performance of your cache cluster. Monitor metrics such as cache hits, cache misses, CPU utilization, and network throughput to ensure that your cache cluster is performing as expected.

CloudWatch Logs

Amazon ElastiCache also integrates with Amazon CloudWatch Logs. For Redis clusters, you can deliver the slow log and the engine log to CloudWatch Logs and monitor them there. Logs can provide valuable insights into the behavior of your cache cluster and help identify any issues or errors.

Alarms and Notifications

Set up alarms and notifications in Amazon CloudWatch to alert you of any performance issues or anomalies in your cache cluster. Configure alarms based on specific metric thresholds, such as a drop in cache hits or sustained high CPU utilization, and receive notifications through Amazon SNS via email or other notification channels.
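As one example of such an alarm, the sketch below builds the parameters for the CloudWatch `PutMetricAlarm` API using the `CPUUtilization` metric in the `AWS/ElastiCache` namespace. The helper names, the 80% default threshold, the five-minute period, and the SNS topic ARN you pass in are assumptions for illustration; choose values that match your own workload.

```python
from typing import Any, Dict


def build_cpu_alarm_request(
    cluster_id: str, sns_topic_arn: str, threshold_percent: float = 80.0
) -> Dict[str, Any]:
    """Build keyword arguments for a CloudWatch alarm on cache CPU usage."""
    return {
        "AlarmName": f"{cluster_id}-high-cpu",
        "Namespace": "AWS/ElastiCache",
        "MetricName": "CPUUtilization",
        "Dimensions": [{"Name": "CacheClusterId", "Value": cluster_id}],
        "Statistic": "Average",
        "Period": 300,  # evaluate five-minute averages
        "EvaluationPeriods": 2,  # two consecutive periods must breach
        "Threshold": threshold_percent,
        "ComparisonOperator": "GreaterThanThreshold",
        "AlarmActions": [sns_topic_arn],  # SNS topic that sends the notification
    }


def put_alarm(request: Dict[str, Any]) -> None:
    """Create or update the alarm; needs boto3 and valid AWS credentials."""
    import boto3  # third-party SDK, imported lazily so the builder stays testable

    boto3.client("cloudwatch").put_metric_alarm(**request)
```

Requiring two consecutive breaching periods is a design choice that avoids notifications for short CPU spikes.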

ElastiCache Events

ElastiCache Events provide real-time notifications about events that occur in your cache cluster, such as changes in the cluster status or configuration. These events can help you stay informed about any changes or issues in your cache cluster.

ElastiCache Snapshots

For Redis clusters, ElastiCache allows you to take snapshots (backups) of your cache cluster, which capture the state of the cluster at a specific point in time. These snapshots can be used for backup and recovery purposes or for creating new cache clusters with the same data.

Integrating Amazon ElastiCache with Other AWS Services

Amazon ElastiCache seamlessly integrates with other AWS services, allowing you to enhance the performance and functionality of your applications.

- Amazon EC2: Amazon ElastiCache can serve as the caching layer for applications running on Amazon EC2 instances. By configuring your application to use the ElastiCache cluster, you can significantly improve the performance of the applications running on those instances. Additionally, you can use Auto Scaling to automatically add or remove EC2 instances based on the demand of your application.

- Amazon RDS: Integrating Amazon ElastiCache with Amazon RDS can further enhance the performance of your database. By caching frequently accessed data in ElastiCache, you can reduce the load on your RDS database and improve overall application performance. ElastiCache also complements Amazon RDS read replicas, which offload read traffic from your primary database, and ElastiCache for Redis offers read replicas of its own to scale reads from the cache.

- AWS Lambda: AWS Lambda functions can benefit from integrating with Amazon ElastiCache. By caching the results of Lambda function invocations, you can reduce the execution time and improve the scalability of your serverless applications. This is particularly useful for functions that have repetitive or computationally expensive operations.

ElastiCache Serverless: Effortless Scaling and Management

Amazon ElastiCache now offers a serverless option, revolutionizing the ease and efficiency of implementing caching solutions. ElastiCache Serverless is designed for simplicity and scalability, catering to the dynamic needs of modern applications. This feature allows customers to add a cache to their applications in under a minute, with the system automatically scaling capacity based on application traffic patterns.

ElastiCache Serverless is ideal for a variety of applications, particularly those with read-heavy workloads like social networking, gaming, media sharing, and Q&A portals, as well as compute-intensive tasks like recommendation engines. By storing frequently accessed data items in memory, ElastiCache Serverless significantly improves latency and throughput, enhancing the overall performance and user experience of these applications.

Conclusion: Unlock the Power of Amazon ElastiCache for Your Web Applications

In today’s digital landscape, Amazon ElastiCache is indispensable, offering speed and seamless experiences by optimizing web application performance through in-memory caching. Supporting Redis and Memcached, it integrates seamlessly with other AWS services, ensuring scalability and swift data retrieval. With robust security, easy setup, and comprehensive monitoring, ElastiCache is a cornerstone for high-performing applications, meeting user expectations and driving organizational success. Unlock its power to elevate your web applications to new heights!